camera: RealSense (D450) depth alignment optimization

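This article is licensed under the Creative Commons Attribution 4.0 International License. When reposting, please cite the original link. When using images, keep their content intact (scaling is fine) and include a link to the article containing the image at the point of citation.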

Alignment implementation in the SDK

align_z_to_other


align_images


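Goal: transform points from the depth coordinate frame into the color coordinate frame.

Basic steps:

(1) Deproject the depth-image pixels into the depth camera coordinate frame

(2) Transform the points from the depth camera frame into the world frame

(3) Transform the points from the world frame into the color camera frame

(4) Project the points in the color camera frame onto the Z=1 plane, so that each one corresponds to a pixel of the color image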
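The four steps can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the SDK's code: it ignores lens distortion, it folds steps (2) and (3) into a single depth-to-color rigid transform `(rotation, translation)`, and the dictionary keys `fx`, `fy`, `ppx`, `ppy` simply mirror the field names of the SDK's intrinsics structure. The function names are hypothetical.

```python
def deproject(pixel, depth, intr):
    """Step (1): pixel (u, v) plus depth -> 3D point in that camera's frame."""
    u, v = pixel
    x = (u - intr["ppx"]) / intr["fx"]
    y = (v - intr["ppy"]) / intr["fy"]
    return (depth * x, depth * y, depth)


def transform(point, rotation, translation):
    """Steps (2)+(3): apply p' = R * p + t, with R a row-major 3x3 nested list."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point, intr):
    """Step (4): normalize onto the Z=1 plane, then apply the intrinsics."""
    x = point[0] / point[2]
    y = point[1] / point[2]
    return (x * intr["fx"] + intr["ppx"], y * intr["fy"] + intr["ppy"])


def depth_pixel_to_color_pixel(pixel, depth, depth_intr, color_intr,
                               rotation, translation):
    """Map one depth pixel to the color image (no distortion handling)."""
    point_depth = deproject(pixel, depth, depth_intr)
    point_color = transform(point_depth, rotation, translation)
    return project(point_color, color_intr)


# Illustrative values only (not taken from a real device).
intr = {"fx": 600.0, "fy": 600.0, "ppx": 320.0, "ppy": 240.0}
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
shifted = depth_pixel_to_color_pixel(
    (320.0, 240.0), 2.0, intr, intr, identity, (0.1, 0.0, 0.0)
)
```

With these made-up numbers, a 0.1 m sideways offset between the cameras at 2 m depth moves the pixel about 30 columns to the right, which shows why the mapping depends on the depth of every pixel.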

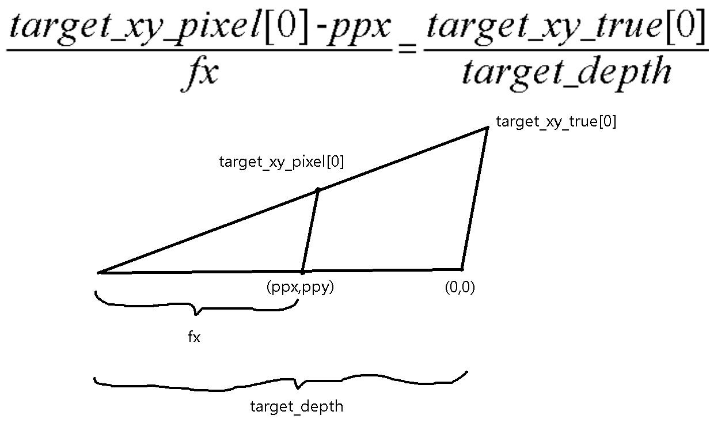

Principle of recovering a target point's real-world coordinates from its pixel coordinates and depth value (using the camera intrinsics)
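For a pinhole camera without lens distortion, the relation can be written as below. The symbols are assumptions introduced here for illustration: $(u, v)$ is the pixel, $d$ the depth value in metric units, $(f_x, f_y)$ the focal lengths and $(c_x, c_y)$ the principal point from the intrinsics.

$$
X = \frac{(u - c_x)\, d}{f_x}, \qquad
Y = \frac{(v - c_y)\, d}{f_y}, \qquad
Z = d
$$

Projection is the inverse: $u = f_x \, X / Z + c_x$ and $v = f_y \, Y / Z + c_y$. When the stream reports a distortion model, the normalized coordinates $(X/Z, Y/Z)$ have to be corrected before or after this step, which the formulas above leave out.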

More efficient way of aligning images

Align to Depth produces lossy color image

Converting to OpenCV Mat format

Converting to an OpenCV-style camera intrinsic matrix and distortion coefficients
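As a rough sketch of that conversion, the snippet below arranges intrinsics into the 3x3 camera matrix layout that OpenCV expects and copies the five distortion coefficients. The dictionary keys mirror the SDK's intrinsics fields (`fx`, `fy`, `ppx`, `ppy`, `coeffs`), and the helper names are hypothetical. Nested lists stand in for `cv::Mat` to keep the example dependency-free. Whether the coefficients can be handed to OpenCV unchanged depends on the distortion model the stream reports, and this sketch assumes they are compatible.

```python
def to_camera_matrix(intr):
    """Arrange fx, fy, ppx, ppy as the row-major 3x3 matrix K."""
    return [
        [intr["fx"], 0.0, intr["ppx"]],
        [0.0, intr["fy"], intr["ppy"]],
        [0.0, 0.0, 1.0],
    ]


def to_dist_coeffs(intr):
    """Copy the five coefficients, assumed to be ordered k1, k2, p1, p2, k3."""
    return [float(c) for c in intr["coeffs"]]


# Illustrative values only (not read from a device).
color_intr = {
    "fx": 615.0, "fy": 615.5, "ppx": 321.2, "ppy": 238.7,
    "coeffs": [0.0, 0.0, 0.0, 0.0, 0.0],
}
camera_matrix = to_camera_matrix(color_intr)
dist_coeffs = to_dist_coeffs(color_intr)
```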


Camera rotation vector and translation vector
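The SDK's extrinsics structure stores the rotation as a column-major 3x3 matrix in a flat array of nine floats, plus a three-element translation in meters. OpenCV functions that take a rotation vector expect the axis-angle (Rodrigues) form instead. The sketch below shows one way to do the conversion without OpenCV. The helper names are hypothetical, and in practice `cv2.Rodrigues` would normally be used for the second step.

```python
import math


def column_major_to_matrix(rot9):
    """Reshape a flat column-major array of 9 values into row-major 3x3."""
    return [[rot9[col * 3 + row] for col in range(3)] for row in range(3)]


def rotation_matrix_to_vector(r):
    """Rotation matrix -> axis-angle vector (axis scaled by the angle).

    Numerically unreliable for angles very close to pi, where sin(theta)
    approaches zero; that case is not handled in this sketch.
    """
    cos_theta = (r[0][0] + r[1][1] + r[2][2] - 1.0) / 2.0
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding
    theta = math.acos(cos_theta)
    if theta < 1e-12:
        return (0.0, 0.0, 0.0)  # no rotation
    scale = theta / (2.0 * math.sin(theta))
    return (
        scale * (r[2][1] - r[1][2]),
        scale * (r[0][2] - r[2][0]),
        scale * (r[1][0] - r[0][1]),
    )


# Illustrative extrinsics: 90 degrees about Z, small offset along X.
rotation_flat = [0.0, 1.0, 0.0, -1.0, 0.0, 0.0, 0.0, 0.0, 1.0]
translation = [0.015, 0.0, 0.0]

rotation_matrix = column_major_to_matrix(rotation_flat)
rvec = rotation_matrix_to_vector(rotation_matrix)
tvec = tuple(translation)  # already a plain 3-vector, no conversion needed
```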


RealSense alignment pipeline


points1, points2:

homography : [0.9783506973455497, -0.003518269926918195, 14.96135699407099; -0.0004602924762779034, 0.9741223762143064, 16.69589351807111; 6.57807138529927e-07, -7.03827767458266e-06, 1]

points2, points1:

homography : [1.021281290759013, 0.002739759630768195, -14.96440533526881; 0.0004858818482422798, 1.025665029417736, -17.1042255312311; -1.068779611668291e-06, 5.619754840876425e-06, 1]
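As a quick sanity check on the two matrices above, a pixel can be pushed through the first homography and then back through the second. If the two estimates are consistent, the round trip should land close to the starting pixel. The sketch assumes the labels name the source and destination point sets in that order, which the text does not state. The test pixel (320, 240) and the helper name are chosen here for illustration.

```python
def apply_homography(h, point):
    """Map a 2D point through a 3x3 homography, including the divide by w."""
    x, y = point
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (xh / w, yh / w)


# The two matrices listed above, copied verbatim.
H_12 = [
    [0.9783506973455497, -0.003518269926918195, 14.96135699407099],
    [-0.0004602924762779034, 0.9741223762143064, 16.69589351807111],
    [6.57807138529927e-07, -7.03827767458266e-06, 1.0],
]
H_21 = [
    [1.021281290759013, 0.002739759630768195, -14.96440533526881],
    [0.0004858818482422798, 1.025665029417736, -17.1042255312311],
    [-1.068779611668291e-06, 5.619754840876425e-06, 1.0],
]

start = (320.0, 240.0)
there = apply_homography(H_12, start)
back = apply_homography(H_21, there)
round_trip_error = (back[0] - start[0], back[1] - start[1])
```

The two matrices are not exact inverses of each other, so a small residual remains. For this test pixel it stays well under one pixel.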

https://github.com/mickeyouyou/realsenseOnCyber/blob/master/realsense_component.cc

https://blog.csdn.net/dieju8330/article/details/85346454

https://docs.opencv.org/3.4/d9/d0c/group__calib3d.html#ga617b1685d4059c6040827800e72ad2b6

https://github.com/IntelRealSense/librealsense/issues/1189

rs2_deproject_pixel_to_point rs2_transform_point_to_point rs2_project_point_to_pixel